I recently learned of the Knuckleheads (yes that is their name) initiative. On the surface they advocate for more competition in search engines. However, after digging deeper, what they are really about is enabling said competition through the creation of a new “US Bureau of the Shared Internet Cache.” And how? By essentially nationalizing Internet search, specifically by confiscating Google’s successful Internet crawler search cache.

Now, past readers of this blog are aware that I’m not a fan of Big G and 99% of the time I use Bing. However, two wrongs don’t make a right.

The beef is supposedly about the de facto preference of millions and millions of Web site owners – some big, some tiny in terms of Web site traffic volume. Some of them explicitly give preference to Big G via their robots.txt configuration file, disallowing (blocking) all the other crawlers! Knuckleheads claim that these independent site owners are biased and present detailed documented evidence.

The first problem is that what Knuckleheads call “bias” is the site owner’s freedom of choice. Predictably, the search wannabes don’t like that and presume to know better.

Lipstick on the Pig

All told, what these search engine wannabes are pushing for is a really bad idea, but it is all too familiar rent-seeking. Instead of developing something better, they seek special privilege by appropriating the successful incumbent.

Here are a few reasons to consider that suggest darker motives:

- Robots.txt can be ignored by crawlers (think red herring)

- Calls for outright property theft and/or a massive taxpayer gift to Big G

- Terrible precedent for more and new digital grifters

- Unintended consequence of likely disinvestment

First, robots.txt truly is an optional guideline for crawlers and bots. According to Moz.com: “Some user agents (robots) may choose to ignore your robots.txt file.” New ones in stealth mode, student CS projects, as well as black hats ignore it. That means the supposed rationale doesn’t even jibe.
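To make the point concrete, here is a minimal sketch using Python's standard `urllib.robotparser`. The robots.txt content, the `example.com` URL and the bot name `ExampleNewBot` are all made up for illustration; the file simply mimics the "Big G only" configuration described earlier. The thing to notice is that the check runs inside the crawler's own code. A polite crawler asks first, while an impolite one just never calls the function.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that welcomes Googlebot and turns away everyone else.
ROBOTS_TXT = """\
User-agent: Googlebot
Allow: /

User-agent: *
Disallow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return what robots.txt *asks* of the given crawler for this URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/some-article"  # placeholder URL
    for bot in ("Googlebot", "ExampleNewBot"):  # second name is invented
        print(bot, "allowed:", is_allowed(ROBOTS_TXT, bot, page))
    # Nothing here blocks a request. A crawler that skips is_allowed()
    # can still fetch the page unless the Web server itself refuses it.
```

In other words, the file expresses the site owner's wishes and nothing more. Actual enforcement, if any, has to happen on the Web server.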

Most Web sites with freely available content only explicitly prevent access through user authentication controlled by the Web server. Everything else is essentially “come and get it.” Would-be competitors to Big G can and do crawl Web sites, which makes the robots.txt complaint serious misdirection about what Knuckleheads is really about. Kinda shady.

Second, the essential facilities doctrine that is referenced is a euphemism; economically it resembles eminent domain in that government force is used for the taking. The difference is that eminent domain is laid out in the Fifth Amendment and requires just compensation. It makes sense that would-be competitors and their investors would be on board.

Perhaps less immoral is the scenario in which Big G gets compensated for the taking. With real estate that is easy enough to do, using recent comparables to establish the price to be paid for seizing the asset. How would the price be established for Big G’s Internet crawler cache? By politicians, bureaucrats and eager competitors, of course. How would that be paid for? Most likely by baking the cost into some budget covered by the taxpayers or, worse still, by pumping up the money supply just a little bit more, or both. It is also not hard to imagine the process becoming punitive as well, e.g. a one-time fine, covering legal costs or maybe ongoing administrative fees. As to which Faustian bargain, it just depends on the legal argument.

Next would be the setting of a precedent, i.e. the path being cleared for even more Internet regulations built on the tortured logic of essential facilities. Ginning up government-enabled franchises – what could go wrong? For professional lobbyists this is a feature, not a bug.

Last, as usual there are unintended consequences of such privatization of gain (the windfall to the Big G wannabes) at public cost (all searchers). In recent years economics has circled around to the idea of externalities, which can be good as well as bad. Consider the example of demand shifts in the price of alcohol vs education. More expensive booze is supposedly better for society (definitely better for government revenues) while a more educated populace has “spillover effects.”

In Big G’s defense, the main positive externality it creates is that everyone benefits from everyone else’s search behavior via its PageRank algorithm. As for the Knuckleheads, they want to put the future quality of search at risk to clearly benefit themselves and MAYBE benefit some abstract Internet searchers. Once the cache becomes public, one problem gets solved but several others pop up. For Big G, the incremental benefit of spending on crawler cadence, better algorithms or speedy servers just went through the floor. That means the future state is seriously put at risk with worse quality (file under: Tragedy of the Commons).

End Result: Flexible Ethics

In the Knucklehead dream world, US taxpayers/Internet users trade the technical availability of more search choice from new players (which they were always free to use beforehand) in exchange for a more invasive/powerful government bureaucracy, combined with either abject theft of private property or a big gift of cash to Big G (which said US taxpayers pay for). Plus, there is the added “benefit” of likely disinvestment by Big G, yielding worse search quality in the future.

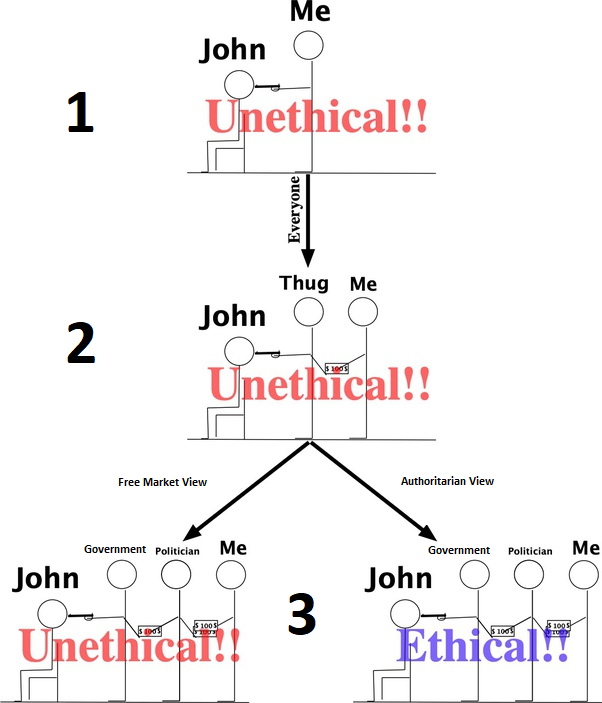

These flexible ethical standards are illustrated by the diagram above. Nearly everybody understands that #1 is immoral behavior: they will not put a gun to John’s head to force him to give up his possessions. Further, they also get that it is wrong to pay a 3rd party intermediary to do it, as shown in #2. They still get that it is immoral, criminal and wrong for society. However, when we engage a 4th entity the ethics change, and far too many are now OK with force being used.

Knuckleheads purport that their position is ethical – it is not. If they somehow stole the technology we’d all see it for what it is; even if they had an offshore team or hackers do the dirty work it would still be plain to see. Only by whitewashing through a 4th party, one that is supposed to be disinterested (the government), is it possible to pull this off. Knuckleheads is #3, plain and simple.

Alternatives

Disappointing then that there isn’t much in the way of free market solutions being proposed. They could incent site owners or Big G to modify their behavior. Behavioral economics and game theory could likely solve this problem without the heavy hand and nasty unintended consequences. Some thought-starters:

- Open source consortium offering alternative cache

- Tax incentives to make it more appealing for Big G (and any others) sharing cache or cloud server capacity

- Crowdfunding campaign to support the effort

- Education of the market

- Etc…

I ran across the above framework which is telling and relevant. Seeking a monopoly from the government is the strategy used by weak competitors and weak co-operators. Not a good look.

I do not say this lightly, but it would be better to break Big G up into many smaller businesses and force more competition/fairer dealings within the advertising, analytics, hardware, consumer products and data businesses. That is a tall order since nobody is forced to use Big G. Sadly, it is easier for Knuckleheads to find some contemptible career politician looking for a cause and a donation.